How I Got ComfyUI Cold Starts Down to Under 3 Seconds on Modal

January 25, 2024

TL;DR

You can start ComfyUI in under 3 seconds by using Modal’s memory snapshotting feature to skip most of the initialization time. Check out the GitHub repository here.

Hey folks! I’ve been tinkering with ComfyUI deployments on Modal lately and wanted to share a cool optimization I stumbled upon. If you’ve ever deployed ComfyUI as an API, you know those cold starts can be a real pain - waiting 10-15 seconds before your container is ready to process requests isn’t exactly ideal.

The Cold Start Problem

I was running into this issue with a project where I needed to scale ComfyUI containers quickly. Looking at the logs, I could see where all that time was going:

- Loading Python deps: ~1s
- Initializing PyTorch and CUDA: ~7s
- Loading custom nodes: ~2-5s (or much more with many custom nodes)

For a single container, that’s annoying. For an API that needs to scale up and down frequently? It’s a showstopper.
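If you want a similar breakdown for your own setup, a rough way to get one is to wrap each startup phase in a timer. This is a minimal sketch using only the standard library. The `timed` helper and the phase names are made up for illustration, and the sleeps are placeholders for your real startup steps.

```python
import time
from contextlib import contextmanager

timings = {}  # phase name -> elapsed seconds


@contextmanager
def timed(phase):
    """Record how long the wrapped block takes under the given phase name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[phase] = time.perf_counter() - start


# Placeholder phases: swap the sleeps for your real startup steps.
with timed("python_deps"):
    time.sleep(0.01)
with timed("torch_cuda_init"):
    time.sleep(0.02)
with timed("custom_nodes"):
    time.sleep(0.01)

for phase, seconds in timings.items():
    print(f"{phase}: {seconds:.3f}s")
```

The numbers you get will depend heavily on your image, GPU type, and custom nodes, so treat the figures above as what I saw rather than a baseline.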

Memory Snapshots to the Rescue?

I knew Modal had this memory snapshotting feature that could potentially help, but there was a catch - it only works with CPU memory, not GPU. And ComfyUI wants to initialize CUDA devices right away during startup.

So I thought, “What if I could trick ComfyUI into thinking there’s no GPU during initialization, then give it access to the GPU after the snapshot is created?”
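To make the idea concrete before touching real PyTorch, here is a toy sketch of the patch-then-restore pattern. It assumes nothing about ComfyUI internals: `fake_cuda`, `pretend_no_gpu`, and `pick_device` are made-up names, and `fake_cuda` is just a stand-in object so the example runs without a GPU.

```python
from contextlib import contextmanager
from types import SimpleNamespace

# Stand-in for torch.cuda so the sketch runs without PyTorch or a GPU.
fake_cuda = SimpleNamespace(is_available=lambda: True)


@contextmanager
def pretend_no_gpu(cuda):
    """Temporarily make cuda.is_available() report False, then restore it."""
    original = cuda.is_available
    cuda.is_available = lambda: False
    try:
        yield
    finally:
        # Runs even if the wrapped code raises, so the patch never leaks.
        cuda.is_available = original


def pick_device(cuda):
    """What init code typically does: choose a device based on availability."""
    return "cuda" if cuda.is_available() else "cpu"


with pretend_no_gpu(fake_cuda):
    device_during_init = pick_device(fake_cuda)  # the snapshot-safe phase

device_after_restore = pick_device(fake_cuda)  # the GPU is visible again
print(device_during_init, device_after_restore)
```

One thing the toy version makes visible: anything that decided on "cpu" during initialization keeps that decision unless it is re-evaluated afterwards, so device selection has to happen again once the patch is lifted.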

The Hack

I ended up creating an ExperimentalComfyServer class that does exactly that. Here’s the gist of it:

    from contextlib import contextmanager

    @contextmanager
    def force_cpu_during_snapshot(self):
        import torch

        # Save original functions
        original_is_available = torch.cuda.is_available
        original_current_device = torch.cuda.current_device

        # Lie to PyTorch - "No GPU here, sorry!"
        torch.cuda.is_available = lambda: False
        torch.cuda.current_device = lambda: torch.device("cpu")

        try:
            yield
        finally:
            # Restore the truth
            torch.cuda.is_available = original_is_available
            torch.cuda.current_device = original_current_device

Then I run ComfyUI directly in the main thread instead of as a subprocess, which eliminates some overhead and gives me more control:

    # Instead of launching a subprocess, we run ComfyUI directly
    self.executor = CustomPromptExecutor()

The final piece was integrating this with Modal’s snapshotting:

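    # Modal only takes snapshots when enable_memory_snapshot=True is set on the class
    @app.cls(image=image, gpu="l4", enable_memory_snapshot=True)
    class ComfyWorkflow:
        @enter(snap=True)  # This is the magic line
        def run_this_on_container_startup(self):
            self.server = ExperimentalComfyServer()
            # Server initialization happens here

The Results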

I was honestly surprised by how well this worked. After the first container creates the snapshot (which still takes the usual 10-15 seconds), subsequent containers start in under 3 seconds. That’s a 4-5x improvement!

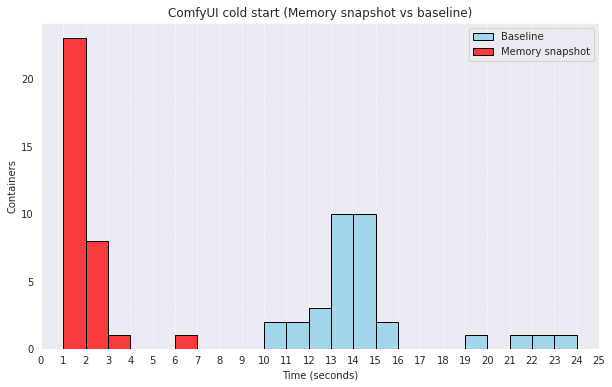

Here’s what my cold start distribution looked like:

- Without snapshotting: Most containers took 12-15 seconds to start

- With snapshotting: Almost all containers started in under 3 seconds

For my use case, this was a game-changer. I could now scale up containers quickly in response to traffic spikes without users experiencing long wait times.

What Else Is In This Repo?

While working on this cold start optimization, I ended up building a more comprehensive framework for deploying ComfyUI workflows as APIs on Modal. Here’s what’s included:

API Endpoints Out of the Box

I followed RunPod’s mental model for the API endpoints, which I’ve found to be really intuitive for serverless inference. The endpoints are:

- /infer_sync - Run a workflow and wait for results
- /infer_async - Queue a workflow and get a job ID
- /check_status/{job_id} - Check if a job is done
- /cancel/{job_id} - Cancel a running job

One thing that bothered me with Modal is that you have to implement the canceling functionality yourself, and it’s not very obvious how to do it from reading the documentation. After digging through the docs and some trial and error, I ended up with this implementation:

    @web_app.post("/cancel/{call_id}")
    async def cancel(call_id: str):
        function_call = functions.FunctionCall.from_id(call_id)
        function_call.cancel()
        return {"call_id": call_id}

Having these endpoints ready to go makes it super easy to integrate with frontend applications or other services.
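On the client side, the async flow boils down to "submit, then poll until done". Here is a minimal sketch of the polling half. The response shape (a JSON object with a `status` field that is `"pending"` until the job finishes) is an assumption for illustration and not necessarily what the repo returns, and `wait_for_job` is a hypothetical helper. In real use, `check_status` would wrap an HTTP GET to /check_status/{job_id}.

```python
import time
from typing import Callable


def wait_for_job(
    check_status: Callable[[str], dict],
    job_id: str,
    poll_interval: float = 1.0,
    timeout: float = 300.0,
) -> dict:
    """Poll check_status(job_id) until the job leaves the pending state."""
    deadline = time.monotonic() + timeout
    while True:
        result = check_status(job_id)
        # Assumed response shape: {"status": "pending" | "done" | "failed", ...}
        if result.get("status") != "pending":
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job {job_id} still pending after {timeout}s")
        time.sleep(poll_interval)


# Demo with a fake status function standing in for GET /check_status/{job_id}
_responses = iter(
    [{"status": "pending"}, {"status": "pending"}, {"status": "done"}]
)
final = wait_for_job(lambda job_id: next(_responses), "job-123", poll_interval=0.01)
print(final["status"])
```

Passing the status check in as a callable keeps the loop independent of any HTTP library, which also makes it easy to test without a deployed endpoint.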

Dynamic Prompt Construction

One of the trickier parts of working with ComfyUI programmatically is constructing those complex workflow JSONs. I built a simple system that lets you:

- Export a workflow from the ComfyUI UI

- Define which parts should be dynamic in your API

- Map API parameters to specific nodes in the workflow
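The last step in the list above, mapping API parameters onto nodes, comes down to setting values at dotted paths inside the exported JSON. Here is a minimal sketch of that idea, assuming an API-format workflow keyed by node ID. `set_if_path_exists` is a hypothetical helper written for this example, and the repo's own helper may behave differently.

```python
def set_if_path_exists(data: dict, dotted_path: str, value) -> bool:
    """Set data[k1][k2]...[kn] = value only if every key already exists."""
    *parents, last = dotted_path.split(".")
    node = data
    for key in parents:
        if not isinstance(node, dict) or key not in node:
            return False
        node = node[key]
    if not isinstance(node, dict) or last not in node:
        return False
    node[last] = value
    return True


# A tiny stand-in for an exported workflow: node "6" is a text-encode node.
workflow = {
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": "placeholder"}},
}

print(set_if_path_exists(workflow, "6.inputs.text", "a cat in a hat"))  # True
print(set_if_path_exists(workflow, "99.inputs.text", "ignored"))        # False
```

Skipping paths that don't exist, instead of creating them, means a typo in a node ID shows up as an unchanged workflow rather than a silently malformed one.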

It looks something like this:

    import json

    def construct_workflow_prompt(input: WorkflowInput) -> dict:
        # Load the base workflow
        with open("/root/prompt.json", "r") as file:
            workflow = json.load(file)

        # Map API inputs to workflow nodes
        values_to_assign = {"6.inputs.text": input.prompt}
        assign_values_if_path_exists(workflow, values_to_assign)
        return workflow

Efficient Model Management

Loading models is another pain point. I used Modal’s volume system to download models directly to volumes, which means:

- Models aren’t included in the Docker image (keeping it small)

- Models are shared between containers

- You only download models once

    async def volume_updater():
        await HfModelsVolumeUpdater(models_to_download).update_volume()

    image = get_comfy_image(
        volume_updater=volume_updater,
        volume=volume,
    )

Custom Node Support

ComfyUI’s ecosystem of custom nodes is one of its strengths, so I made sure to support them. The snapshot.json file lets you specify which custom nodes to install:

    {
      "comfyui": "4209edf48dcb72ff73580b7fb19b72b9b8ebba01",
      "git_custom_nodes": {
        "https://github.com/WASasquatch/was-node-suite-comfyui": {
          "hash": "47064894",
          "required": true
        }
      }
    }

How To Use It

If you want to try this out yourself:

- Clone the repo: git clone https://github.com/tolgaouz/modal-comfy-worker.git
- Export your ComfyUI workflow to prompt.json
- Update prompt_constructor.py to map your API inputs
- Deploy to Modal: modal deploy workflow.py

For the experimental cold start optimization specifically, check out the examples/preload_models_with_snapshotting folder.

Final Thoughts

This project started as a simple attempt to speed up ComfyUI cold starts, but evolved into something more comprehensive. I’m still tweaking things and adding features, but it’s already been super useful for my own projects.

If you’re working with ComfyUI and Modal, give it a try and let me know what you think. And if you have ideas for improvements or run into issues, feel free to open an issue or PR on GitHub.

Note: The experimental memory snapshotting feature works great for my use cases, but I haven’t tested it with every possible ComfyUI custom node or workflow. Your mileage may vary, especially with complex custom nodes that do unusual things during initialization. Always test thoroughly before deploying to production!